Relationships Among Media Stream, Track, and Channel in WebRTC

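Some people who are new to WebRTC find a few of its terms and concepts, and the relationships among them, confusing and easy to mix up. Below I walk through how they relate to one another. For reference only!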

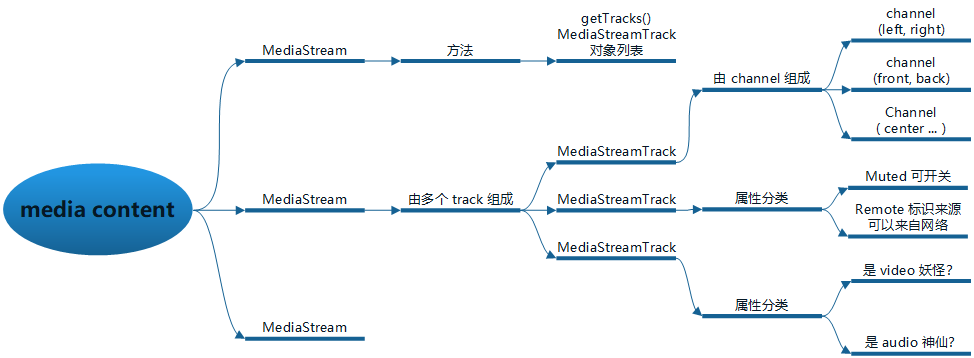

1. Media Content

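As the figure shows, all published media content (Media Content) is in fact made up of media streams (Media Stream). The most common form of a Media Content is an audio/video file, but it comes in many forms and can also cover an entire scene, so it is a broad definition.

Each Media Content can consist of one or more Media Streams; most of our audio/video files contain a single Media Stream.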

2. Media Stream

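A Media Stream is made up of one or more media stream tracks (Media Stream Track); the specification quoted further down also allows a stream with zero tracks. You can think of a Media Stream Track as a microphone or a camera. A Media Stream may also come from the remote peer over the network, so a Media Stream Track can also be pictured as a network SOCKET. The analogy is not quite exact, since strictly speaking the track's data comes from a SOCKET, but it makes the idea more concrete.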
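To make the "a stream is a group of tracks" idea concrete, here is a minimal sketch that groups the tracks of a stream by kind. The `TrackInfo` and `StreamInfo` interfaces and the `describeStream` helper are my own illustrative names, not part of WebRTC; they only mirror the `getTracks()`, `kind`, and `label` members of the real `MediaStream` and `MediaStreamTrack`, so the code can also run outside a browser.

```typescript
// Hypothetical stand-in shapes (assumption): they mirror only the members
// of MediaStream / MediaStreamTrack that this sketch touches.
interface TrackInfo {
  kind: string;  // "audio" or "video"
  label: string; // human-readable source name, e.g. the device name
}

interface StreamInfo {
  getTracks(): TrackInfo[];
}

// Group the labels of a stream's tracks by their kind.
function describeStream(stream: StreamInfo): Record<string, string[]> {
  const byKind: Record<string, string[]> = {};
  for (const track of stream.getTracks()) {
    const labels = byKind[track.kind] ?? [];
    labels.push(track.label);
    byKind[track.kind] = labels;
  }
  return byKind;
}
```

In a browser, the stream returned by `navigator.mediaDevices.getUserMedia({ audio: true, video: true })` should be usable as the argument, since it exposes the same members.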

3. Media Stream Track

A Media Stream Track comes in two kinds: audio and video.

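One or more Media Stream Tracks make up a Media Stream. As described above, the most direct way to understand a Media Stream Track is as a local input device, or as a unit that collects and processes data from the remote peer; the SOCKET analogy is the more vivid picture.

Some devices are made up of multiple channels. An audio capture device, for example, may be mono or stereo, and a playback device may label sound by direction, such as front, back, left, right, up, and down. These are what we call channels. Video channels are similar: they capture video from different angles. So a Media Stream Track contains one or more channels. Channels are a property of the device, and different devices define them differently.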
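As a rough illustration of channels being a property of the source, the sketch below reads the channel count an audio track reports through `getSettings()`. The `channelCount` setting is part of the Media Capture and Streams settings dictionary, but not every browser reports it, so the code treats it as optional. The interface names and the two helper functions are hypothetical and exist only for this example.

```typescript
// Hypothetical stand-in shapes (assumption): only the members used here.
interface ChannelSettings {
  channelCount?: number; // may be missing if the browser does not report it
}

interface ChannelTrack {
  kind: string;
  getSettings(): ChannelSettings;
}

// Return the channel count of an audio track, or undefined for non-audio
// tracks and for tracks whose settings do not include a channel count.
function audioChannelCount(track: ChannelTrack): number | undefined {
  if (track.kind !== "audio") {
    return undefined;
  }
  return track.getSettings().channelCount;
}

// Turn a channel count into a short human-readable name.
function channelLayoutName(count: number | undefined): string {
  if (count === undefined) {
    return "unknown";
  }
  if (count === 1) {
    return "mono";
  }
  if (count === 2) {
    return "stereo";
  }
  return `${count} channels`;
}
```

With a real microphone track, `channelLayoutName(audioChannelCount(track))` would typically give "mono" or "stereo", depending on the device and the constraints used.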

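In WebRTC, the most common scenario with a peer is to set up one Media Stream for the exchange, which means creating video and audio Media Stream Tracks. With those we have only the capture or rendering part. The call as a whole really consists of two pipelines, one for sending and one for receiving. Calling the addTrack interface binds a stream, and underneath, that stream is responsible for sending and receiving the Media Stream Track data, i.e. the SOCKET mentioned above. For details on how a track is bound to a stream, see my other blog posts: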

http://my.oschina.net/u/4249347
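The sending side described above can be sketched roughly as follows: every track of a stream is handed to the peer connection with `addTrack(track, stream)`, which is the real `RTCPeerConnection` method signature. Everything else here is an assumption for illustration: `PeerLike`, `SendTrack`, `SendStream`, and `publishStream` are hypothetical names, and the interfaces cover only the members the sketch calls.

```typescript
// Hypothetical stand-in shapes (assumption): shaped after the parts of
// MediaStreamTrack, MediaStream and RTCPeerConnection used below.
interface SendTrack {
  kind: string;
}

interface SendStream {
  getTracks(): SendTrack[];
}

interface PeerLike {
  // The real method returns an RTCRtpSender; the sketch ignores it.
  addTrack(track: SendTrack, ...streams: SendStream[]): unknown;
}

// Add every track of the stream to the connection and associate it with
// that stream, so the remote side can group the tracks back together.
// Returns the number of tracks added.
function publishStream(pc: PeerLike, stream: SendStream): number {
  let added = 0;
  for (const track of stream.getTracks()) {
    pc.addTrack(track, stream);
    added += 1;
  }
  return added;
}
```

On the receiving side, the browser delivers the remote tracks through the connection's `track` event; that half of the pipeline is not shown here.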

Below are the reference descriptions I found on the relationship among the three, for readers' benefit.

http://webrtc.org/getting-started/media-capture-and-constraints?hl=en

Streams and tracks

A MediaStream represents a stream of media content, which consists of tracks (MediaStreamTrack) of audio and video. You can retrieve all the tracks from MediaStream by calling MediaStream.getTracks(), which returns an array of MediaStreamTrack objects.

MediaStreamTrack

A MediaStreamTrack has a kind property that is either audio or video, indicating the kind of media it represents. Each track can be muted by toggling its enabled property.

A track has a Boolean property remote that indicates if it is sourced by a RTCPeerConnection and coming from a remote peer.
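The `enabled` toggle mentioned in the quote can be sketched like this: a small helper that switches every track of one kind on or off, which is roughly what a "mute microphone" or "camera off" button does. The helper `setKindEnabled` and the two interfaces are hypothetical names for this example; only `getTracks()`, `kind`, and `enabled` are taken from the real API.

```typescript
// Hypothetical stand-in shapes (assumption): only the members used here.
interface ToggleTrack {
  kind: string;
  enabled: boolean;
}

interface ToggleStream {
  getTracks(): ToggleTrack[];
}

// Set `enabled` on every track of the given kind.
// Returns how many tracks actually changed state.
function setKindEnabled(stream: ToggleStream, kind: string, enabled: boolean): number {
  let changed = 0;
  for (const track of stream.getTracks()) {
    if (track.kind === kind && track.enabled !== enabled) {
      track.enabled = enabled;
      changed += 1;
    }
  }
  return changed;
}
```

For example, `setKindEnabled(stream, "audio", false)` would silence the outgoing audio while leaving the video tracks untouched.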

http://developer.mozilla.org/en-US/docs/Web/API/Media_Streams_API

A MediaStream consists of zero or more MediaStreamTrack objects, representing various audio or video tracks. Each MediaStreamTrack may have one or more channels.

The channel represents the smallest unit of a media stream, such as an audio signal associated with a given speaker, like left or right in a stereo audio track.

MediaStream objects have a single input and a single output. A MediaStream object generated by getUserMedia() is called local, and has as its source input one of the user's cameras or microphones. A non-local MediaStream may be representing a media element, like <video> or <audio>, a stream originating over the network, and obtained via the WebRTC RTCPeerConnection API, or a stream created using the Web Audio API MediaStreamAudioDestinationNode.

The output of the MediaStream object is linked to a consumer. It can be a media element, like <audio> or <video>, the WebRTC RTCPeerConnection API or a Web Audio API MediaStreamAudioSourceNode.

http://w3c.github.io/mediacapture-main/#mediastreamtrack

The two main components in the MediaStream API are the MediaStreamTrack and MediaStream interfaces. The MediaStreamTrack object represents media of a single type that originates from one media source in the User Agent, e.g. video produced by a web camera. A MediaStream is used to group several MediaStreamTrack objects into one unit that can be recorded or rendered in a media element.

Each MediaStream can contain zero or more MediaStreamTrack objects. All tracks in a MediaStream are intended to be synchronized when rendered. This is not a hard requirement, since it might not be possible to synchronize tracks from sources that have different clocks. Different MediaStream objects do not need to be synchronized.
